REPO LINK HERE Large language models (LLMs) have shown remarkable capabilities in factual question answering in recent years, but they sometimes hallucinate: they produce responses that appear correct but are in fact incorrect. Hallucinations pose significant challenges for deploying LLMs in reliable medical knowledge queries, which require a high degree of confidence in the output. This study follows the ideas of the paper “On Early Detection of Hallucinations in Factual Question Answering”. A multi-turn recursive framework for detecting and mitigating hallucinations in LLM responses to factual questions was implemented using the Open-llama-7B model. The pipeline uses trained classification models to guide a language model through its recursive generation. A sensitivity hint prefix prompting method was adopted to reduce semantic entropy in the output and, in turn, the hallucination rate on the TriviaQA dataset. Overall, a recursive generation pipeline with a known prior on whether an answer is hallucinated shows promising results. The next phase of the project involves adapting the same architecture to medical Q&A datasets to improve the generated responses of medical LLMs.
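The recursive loop described in this abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's implementation: `generate` and `hallucination_score` are hypothetical callables standing in for the language model and the trained classifier, and the hint text, threshold, and turn limit are placeholder values.

```python
from typing import Callable, Tuple


def recursive_answer(
    question: str,
    generate: Callable[[str], str],                    # hypothetical: prompt -> answer text
    hallucination_score: Callable[[str, str], float],  # hypothetical: (question, answer) -> score in [0, 1]
    threshold: float = 0.5,                            # assumed decision threshold
    max_turns: int = 3,                                # assumed cap on regeneration turns
    hint: str = "The previous answer may be incorrect. Answer carefully.\n",  # placeholder hint prefix
) -> Tuple[str, float, int]:
    """Regenerate with a hint prefix while the classifier flags the answer."""
    answer = generate(question)
    score = hallucination_score(question, answer)
    turns = 1
    # Keep retrying with the hint prefix until the answer passes or turns run out.
    while score >= threshold and turns < max_turns:
        answer = generate(hint + question)
        score = hallucination_score(question, answer)
        turns += 1
    return answer, score, turns
```

The function returns the last answer together with its classifier score and the number of turns used, so a caller can still reject an answer that never dropped below the threshold.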

May 1, 2025

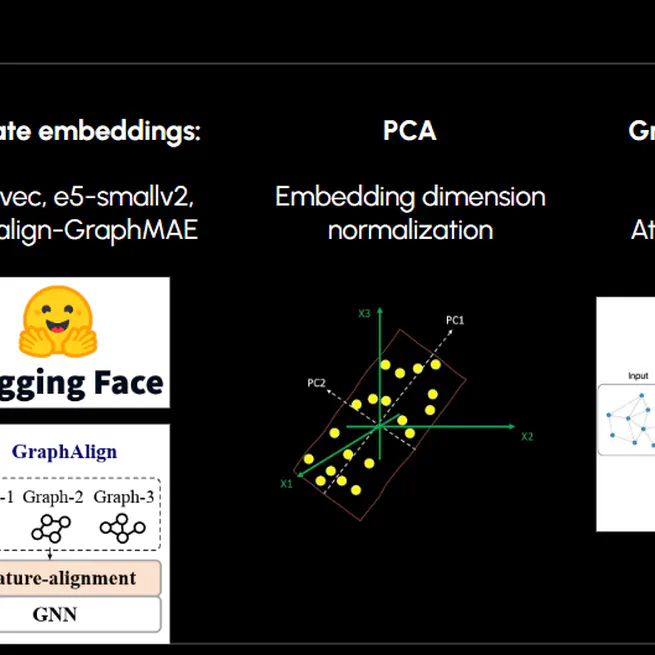

REPO LINK HERE Scaling and generalizing cross-domain tasks across graph datasets remains a challenge due to the variability in node features and edge-based relationships and the inherent difficulty of transfer learning between graphs. The aim of this project is to explore the use of language embeddings to achieve task generalization across different graph structures and to build models that can learn cross-domain relationships. By comparing the performance of graph neural networks trained on different language embeddings, the author evaluates the effectiveness of various encoding architectures. Contrary to expectations, the simpler word2vec outperformed the E5-Small-V2 and GraphAlign pre-trained embeddings. Finally, the author discusses the limitations and conclusiveness of the study and outlines future research directions in unifying cross-domain graphs with a scalable architecture.

May 1, 2025

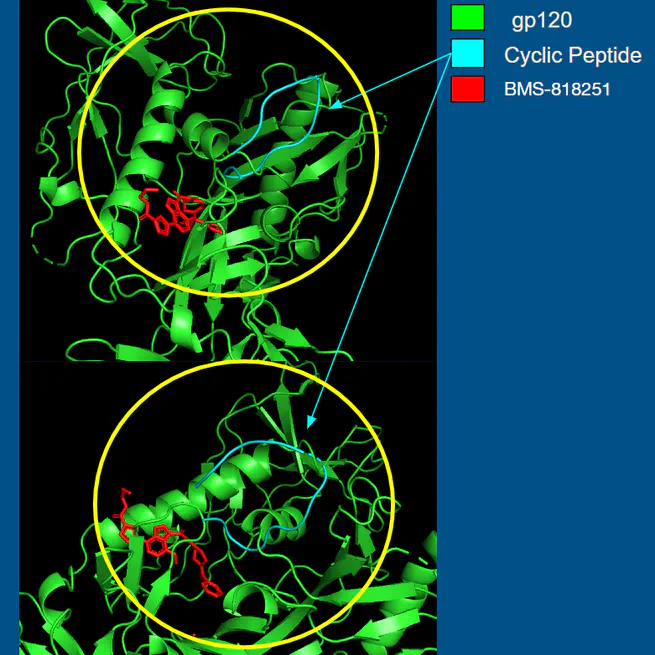

PAPER This independent research study conducted a series of investigations to enhance the precision of cyclic peptide generation targeting the HIV gp120 trimer. The methods included proximity mapping to focus on the CD4 binding site, centroid distance penalization, generative loss tuning, and the development of custom generative functions. By synthesizing these findings, a novel methodology was implemented to generate candidate cyclic peptides of varying lengths. This process successfully produced cyclic peptides that resemble the crystal structure of the CD4 attachment inhibitor BMS-818251. The new methodology demonstrated improved control and precision in the generation of compounds, thereby enhancing the applicability of AlphaFold in the drug discovery process.
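One way to picture the centroid distance penalization mentioned above is a loss term that grows as the peptide's centroid drifts away from the centroid of the binding-site residues. The sketch below is an assumption for illustration only: the abstract does not give the functional form, so the squared hinge, the `tolerance` and `weight` parameters, and the function names are all hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def centroid(coords: Sequence[Point]) -> Point:
    """Arithmetic mean of a set of 3D coordinates."""
    n = len(coords)
    if n == 0:
        raise ValueError("coords must not be empty")
    return (
        sum(p[0] for p in coords) / n,
        sum(p[1] for p in coords) / n,
        sum(p[2] for p in coords) / n,
    )


def centroid_distance_penalty(
    peptide: Sequence[Point],
    site: Sequence[Point],
    tolerance: float = 0.0,  # assumed: distance allowed before any penalty applies
    weight: float = 1.0,     # assumed: scaling of the term within the total loss
) -> float:
    """Squared-hinge penalty on the distance between the two centroids (hypothetical form)."""
    d = math.dist(centroid(peptide), centroid(site))
    return weight * max(0.0, d - tolerance) ** 2
```

In a generative setting a term like this would be added to the design loss so that candidates positioned far from the target site are penalized; the actual weighting and distance measure used in the study may differ.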

Jan 30, 2025

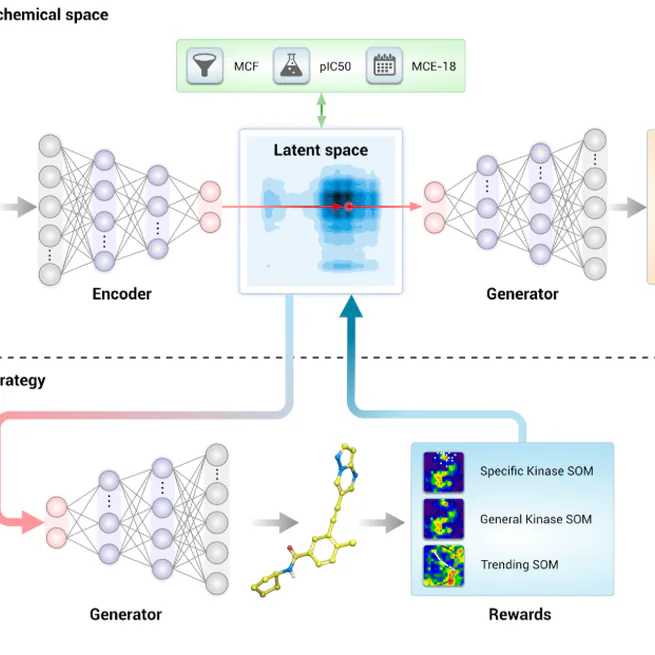

Generative Drug-Discovery using Adversarial Auto-Generative Encoders

Sep 25, 2023