AlbumGen: An exploration into Multimodality with LLMs

Abstract

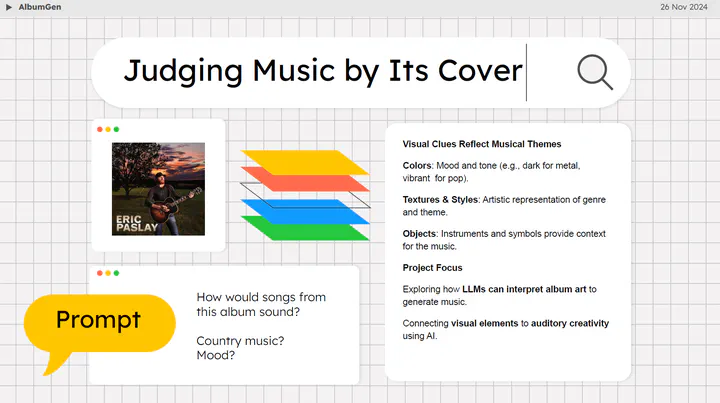

This project explores the intersection of computer vision and music generation: an image captioning model describes album cover art, and a text-to-music generation model such as MusicGen creates music from those captions. By training a model to generate descriptive captions from album covers, we aim to develop a system that automatically produces music aligned with the visual themes of the artwork. The approach suggests a new avenue for creative, AI-assisted music production.

Publication

AlbumGen - Image-to-Music Generation with Textual Intermediaries

Full Text of paper here. Presentation of AlbumGen with Demo here.

AlbumGen explores generating music from album cover art using a multi-step pipeline that incorporates image captioning, text-to-music generation, and explainable intermediaries. The aim is to connect the visual themes of album art with music creation, leveraging advances in computer vision and large language models (LLMs). Rather than delving into philosophical interpretations of how music should be conditioned on image inputs, we ground the pipeline in explainable text intermediaries: every step between the image and the music is expressed as text that can be read and inspected.
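
The pipeline described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the AlbumGen implementation: the names `album_cover_to_music`, `GenerationTrace`, `Captioner`, `MusicGenerator`, and the prompt template are all hypothetical, and the captioning and text-to-music models are passed in as plain callables so that any model (for example a MusicGen wrapper) could be plugged in. The point is only to show how the caption and prompt are kept as inspectable text intermediaries.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical signatures: any captioning model and any text-to-music model
# can be wrapped in a function of this shape.
Captioner = Callable[[bytes], str]
MusicGenerator = Callable[[str], Sequence[float]]


@dataclass
class GenerationTrace:
    """Holds the text intermediaries alongside the generated audio."""
    caption: str            # text produced from the album cover
    prompt: str             # text actually sent to the music model
    audio: Sequence[float]  # audio samples returned by the music model


def album_cover_to_music(
    image: bytes,
    captioner: Captioner,
    music_generator: MusicGenerator,
    prompt_template: str = "Music for an album whose cover shows: {caption}",
) -> GenerationTrace:
    """Run image -> caption -> prompt -> audio, keeping every text step."""
    caption = captioner(image).strip()
    if not caption:
        raise ValueError("captioner returned an empty caption")
    prompt = prompt_template.format(caption=caption)
    audio = music_generator(prompt)
    return GenerationTrace(caption=caption, prompt=prompt, audio=audio)
```

Because the function returns the caption and prompt together with the audio, a listener can check which description of the cover led to a given piece of music, which is the sense in which the intermediaries are explainable.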

Demo

Some demos can be found here.