Cross-domain task generalization of graphs with language embeddings

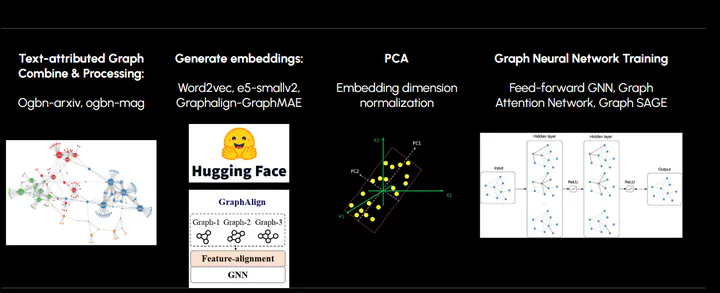

Scaling and generalizing tasks across graph datasets from different domains remains a challenge because of the variability in node features and edge-based relationships, and because of the inherent difficulty of transfer learning among graphs. This project explores whether language embeddings can support task generalization across different graph structures and enable models that learn cross-domain relationships. By comparing the performance of graph neural networks trained with different language embeddings, the work assesses the effectiveness of various encoding architectures. Contrary to expectations, the simpler word2vec embeddings outperformed the E5-Small-V2 and GraphAlign pre-trained embeddings. Finally, the limitations and conclusiveness of the study are discussed, along with future research directions for unifying cross-domain graphs with a scalable architecture.